Spring Data MongoDB reactive performance tip

Improve the insert performance of Spring Data MongoDB reactive repositories with a simple tip.

Introduction

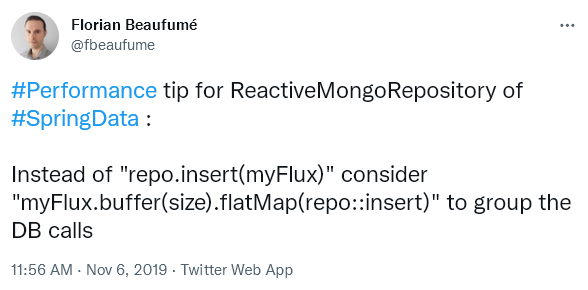

A while ago I shared a tip for improving insert performance with Spring Data MongoDB in reactive applications. This article explains that tip in detail.

Default reactive insertion

Spring Boot, MongoDB and Reactor are a good match to write scalable applications, but there is a gotcha when inserting data. In a Spring Boot reactive application using MongoDB, a typical repository component is:

public interface ItemRepository extends ReactiveMongoRepository<Item, String> {

// Other methods, as needed

}

To insert a List of business items in the database, the insert(Iterable<S> entities) repository method works as intended: a single network call is used for all inserted data. Inserting a Flux of items is a bit more complicated, since a Flux is a reactive type that represents an asynchronous sequence of elements. At first, the insert(org.reactivestreams.Publisher<S> entities) repository method seems to be a good match:

Flux<Item> itemFlux = ...

itemRepository.insert(itemFlux);

But if we monitor the database calls, we see that the items are inserted one by one. To observe this, we enable MongoDB request logging by setting the appropriate log category, for example in application.properties:

logging.level.org.springframework.data.mongodb.core.ReactiveMongoTemplate=debug

When the code is executed to insert a Flux of (for example) 6 items, the logs show:

19:22:18.890 DEBUG [ctor-http-nio-3] o.s.d.m.core.ReactiveMongoTemplate : Inserting Document containing fields: [date, _class] in collection: item

19:22:18.892 DEBUG [ctor-http-nio-3] o.s.d.m.core.ReactiveMongoTemplate : Inserting Document containing fields: [date, _class] in collection: item

19:22:18.893 DEBUG [ctor-http-nio-3] o.s.d.m.core.ReactiveMongoTemplate : Inserting Document containing fields: [date, _class] in collection: item

19:22:18.893 DEBUG [ctor-http-nio-3] o.s.d.m.core.ReactiveMongoTemplate : Inserting Document containing fields: [date, _class] in collection: item

19:22:18.893 DEBUG [ctor-http-nio-3] o.s.d.m.core.ReactiveMongoTemplate : Inserting Document containing fields: [date, _class] in collection: item

19:22:18.894 DEBUG [ctor-http-nio-3] o.s.d.m.core.ReactiveMongoTemplate : Inserting Document containing fields: [date, _class] in collection: item

This behavior can also be seen in the source of SimpleReactiveMongoRepository, the repository implementation class from Spring Data. The insert(T objectToSave, String collectionName) method of mongoOperations is called for each item of the Flux:

@Override

public <S extends T> Flux<S> insert(Publisher<S> entities) {

Assert.notNull(entities, "The given Publisher of entities must not be null!");

return Flux.from(entities).flatMap(entity -> mongoOperations.insert(entity, entityInformation.getCollectionName()));

}

Note that some other methods of SimpleReactiveMongoRepository that take a Publisher parameter also issue one database call per persisted item. The optimization described in the next section can be applied to these methods as well.

Optimized insertion

In some cases inserting the items one by one is not an issue, for example if the items in the Flux are emitted with some delay between each element.

But sometimes we prefer to group the database calls. To do so, we can simply combine Reactor's buffer(int) operator with the insert(Iterable<S> entities) repository method:

Flux<Item> itemFlux = ...

itemFlux.buffer(3).flatMap(itemRepository::insert);

With a batch size of 3, the logs are now:

19:37:24.445 DEBUG [ctor-http-nio-3] o.s.d.m.core.ReactiveMongoTemplate : Inserting list of Documents containing 3 items

19:37:24.446 DEBUG [ctor-http-nio-3] o.s.d.m.core.ReactiveMongoTemplate : Inserting list of Documents containing 3 items

The insertions are now correctly grouped. For small volumes of data, the performance difference may not be noticeable. But for larger volumes it can make a difference.
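To make the grouping concrete without a database, here is a minimal plain-Java sketch. It is not the Reactor or Spring Data implementation: `CountingStore`, `buffer` and `callsFor` are hypothetical names, and the `buffer` helper only imitates, on an in-memory list, the grouping that Reactor's buffer(int) performs on a Flux. It assumes Java 9 or later.

```java
import java.util.ArrayList;
import java.util.List;

final class BatchingSketch {

    // Hypothetical stand-in for the repository: it only counts the calls it receives.
    static final class CountingStore {
        int calls = 0;
        int storedItems = 0;

        void insert(List<String> batch) {
            calls++;
            storedItems += batch.size();
        }
    }

    // Splits items into consecutive batches of at most batchSize elements.
    // The last batch may be smaller, as with Reactor's buffer(int).
    static <T> List<List<T>> buffer(List<T> items, int batchSize) {
        if (batchSize <= 0) {
            throw new IllegalArgumentException("batchSize must be positive");
        }
        List<List<T>> batches = new ArrayList<>();
        for (int start = 0; start < items.size(); start += batchSize) {
            int end = Math.min(start + batchSize, items.size());
            batches.add(new ArrayList<>(items.subList(start, end)));
        }
        return batches;
    }

    // Inserts itemCount dummy items batch by batch and returns the number of store calls.
    static int callsFor(int itemCount, int batchSize) {
        List<String> items = new ArrayList<>();
        for (int i = 0; i < itemCount; i++) {
            items.add("item-" + i);
        }
        CountingStore store = new CountingStore();
        for (List<String> batch : buffer(items, batchSize)) {
            store.insert(batch);
        }
        return store.calls;
    }

    public static void main(String[] args) {
        System.out.println("6 items, batch size 1: " + callsFor(6, 1) + " calls");
        System.out.println("6 items, batch size 3: " + callsFor(6, 3) + " calls");
    }
}
```

With 6 items, a batch size of 1 results in 6 calls and a batch size of 3 in 2 calls, which matches the two log lines above.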

Performance measures

Let's measure the performance of these strategies. I used a remote MongoDB (thanks to the free plan of Clever Cloud MongoDB hosting) so that network latency would emphasize the performance difference. Even so, the ping between the application and the database was only 16 ms. The database used a replica set of 2 instances. The minimum and maximum sizes of the MongoDB client connection pool were both set to 100. The ReactiveMongoTemplate logs were temporarily disabled.

| Strategy | 100 items | 1000 items | 10000 items |

|---|---|---|---|

| Default | 30 ms | 200 ms | 1900 ms |

| Optimized with batch size of 1 | 30 ms | 200 ms | 1900 ms |

| Optimized with batch size of 10 | 24 ms | 34 ms | 210 ms |

| Optimized with batch size of 100 | 24 ms | 30 ms | 80 ms |

As expected, the optimized insertion with a batch size of 1 takes the same time as the default insertion, since they execute similar database calls.

With larger batch sizes we see performance benefits, and the benefits grow with the number of items. We also see diminishing returns: for 10000 items, increasing the batch size from 1 to 10 gives a 9x speedup, but increasing it from 10 to 100 gives only a further 2.6x speedup.
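The ratios quoted above follow directly from the 10000-item column of the table. As a quick check, this small Java sketch recomputes them. `SpeedupCheck` and `speedup` are illustrative names, and the durations are copied from the table.

```java
import java.util.Locale;

final class SpeedupCheck {

    // Speedup factor obtained when a duration drops from beforeMs to afterMs.
    static double speedup(double beforeMs, double afterMs) {
        return beforeMs / afterMs;
    }

    public static void main(String[] args) {
        // Durations for 10000 items, taken from the table above.
        double batch1 = 1900;
        double batch10 = 210;
        double batch100 = 80;

        System.out.println(String.format(Locale.ROOT,
                "Batch size 1 -> 10:   x%.1f", speedup(batch1, batch10)));
        System.out.println(String.format(Locale.ROOT,
                "Batch size 10 -> 100: x%.1f", speedup(batch10, batch100)));
    }
}
```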

If you are not familiar with reactive programming, you may be surprised by how fast the default insertion is. Only 200 ms for 1000 consecutive insertions: one insertion takes 0.2 ms on average, well below the ping (16 ms). How? Simply because insertions are executed asynchronously: there is no need to wait for one insertion to complete before starting the next. This maximizes the connection pool usage and reduces the total duration.
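This overlap can be illustrated without MongoDB. The sketch below is a plain-Java simulation, not what the MongoDB driver actually does: `simulatedInsert` is a hypothetical stand-in that completes after a fixed delay (16 ms, like the ping above), and all calls are started without waiting for one another. It assumes Java 9 or later for CompletableFuture.delayedExecutor.

```java
import java.util.ArrayList;
import java.util.List;
import java.util.concurrent.CompletableFuture;
import java.util.concurrent.Executor;
import java.util.concurrent.TimeUnit;

final class AsyncLatencyDemo {

    // Hypothetical stand-in for one insertion: completes after latencyMs
    // without blocking the calling thread.
    static CompletableFuture<Integer> simulatedInsert(int item, long latencyMs) {
        Executor delayed = CompletableFuture.delayedExecutor(latencyMs, TimeUnit.MILLISECONDS);
        return CompletableFuture.supplyAsync(() -> item, delayed);
    }

    // Starts all insertions up front, waits for all of them,
    // and returns the total elapsed time in milliseconds.
    static long runConcurrently(int count, long latencyMs) {
        long start = System.nanoTime();
        List<CompletableFuture<Integer>> pending = new ArrayList<>();
        for (int i = 0; i < count; i++) {
            pending.add(simulatedInsert(i, latencyMs));
        }
        CompletableFuture.allOf(pending.toArray(new CompletableFuture<?>[0])).join();
        return TimeUnit.NANOSECONDS.toMillis(System.nanoTime() - start);
    }

    public static void main(String[] args) {
        int count = 100;
        long latencyMs = 16;
        long elapsed = runConcurrently(count, latencyMs);
        System.out.println("One call at a time would need at least " + count * latencyMs + " ms");
        System.out.println("Overlapping calls took " + elapsed + " ms");
    }
}
```

Because the simulated latencies overlap, the total time stays close to a single round trip instead of growing with the number of calls. A real database adds its own processing time and is limited by the connection pool, which is why the measured durations above still grow with the number of items.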

Conclusion

We saw that some persistence methods of Spring Data MongoDB reactive repositories may not deliver ideal performance. We described a way to improve this and measured the performance difference.

A sample project is available on GitHub, see spring-data-mongodb-reactive-insertion. The project README describes how to configure and run the application.